- cross-posted to:

- [email protected]

You can instantly get whatever you want, only it’s made from 100% technical debt

That estimate seems a little low to me. It’s at least 115%.

even more. The first 100% of the tech debt is just understanding “your own” code.

Just start again from scratch for every feature 🤣

deleted by creator

When did you get diagnosed?

He’s got that ol’ New York City Metropolitan Area Transit Authority Blues again, momma!

OpenTTD is a good game.

deleted by creator

That’s the thing, it’s a useful assistant for an expert who will be able to verify any answers.

It’s a disaster for anyone who’s ignorant of the domain.

Tell me about it. I teach a Python class. Super basic, super easy. Students are sometimes idiots, but if they follow the steps, most of them should be fine. Sometimes I get one who thinks they can just do everything with ChatGPT. They’ll be working on their final assignment and they’ll ask me what a for loop is for. Then I look at their code and it looks like Sanskrit. They probably haven’t written a single line of code in those weeks.

If you’re having to do repetitive shit, you might reconsider your approach.

I’ve tried this, to convert a large json file to simplified yaml. It was riddled with hallucinations and mistakes even for this simple, deterministic, verifiable task.
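For comparison, a conversion like this can be done deterministically with a few lines of ordinary code. The sketch below is only an illustration: `to_yaml_lines` is a hypothetical helper, not part of any library, and it assumes "simplified YAML" means block-style mappings and lists with scalars left in JSON syntax (which YAML also accepts).

```python
import json


def scalar(value):
    """Render a scalar (or an empty container) in JSON syntax, which is also valid YAML."""
    return json.dumps(value, ensure_ascii=False)


def to_yaml_lines(value, indent=0):
    """Hypothetical helper: turn parsed JSON into a list of block-style YAML lines."""
    pad = "  " * indent
    if isinstance(value, dict) and value:
        lines = []
        for key, item in value.items():
            if isinstance(item, (dict, list)) and item:
                # Non-empty containers go on the following lines, one level deeper.
                lines.append(f"{pad}{scalar(key)}:")
                lines.extend(to_yaml_lines(item, indent + 1))
            else:
                lines.append(f"{pad}{scalar(key)}: {scalar(item)}")
        return lines
    if isinstance(value, list) and value:
        lines = []
        for item in value:
            if isinstance(item, (dict, list)) and item:
                lines.append(f"{pad}-")
                lines.extend(to_yaml_lines(item, indent + 1))
            else:
                lines.append(f"{pad}- {scalar(item)}")
        return lines
    # Scalars and empty containers fit on a single line.
    return [f"{pad}{scalar(value)}"]


if __name__ == "__main__":
    doc = json.loads('{"name": "app", "ports": [80, 443], "meta": {"debug": false}}')
    print("\n".join(to_yaml_lines(doc)))
```

The same input always produces the same output, so the result can be checked once and trusted afterwards.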

Depending on the situation, repetitive shit might be unavoidable

Usually you can solve the issue by using regex, but regex can be difficult to work with as well
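As a rough illustration of the regex route, here is a minimal sketch that renames camelCase identifiers to snake_case in one pass. The function name `camel_to_snake` and the sample line are made up for the example, and the pattern is deliberately naive: it will also rewrite matches inside strings and comments, which is the kind of thing that makes regex awkward to work with.

```python
import re


def camel_to_snake(source: str) -> str:
    """Insert an underscore before each capital that follows a lowercase letter or digit."""
    return re.sub(
        r"(?<=[a-z0-9])([A-Z])",
        lambda match: "_" + match.group(1).lower(),
        source,
    )


if __name__ == "__main__":
    print(camel_to_snake("userName = getUserId(rawInput)"))
```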

Skill issue…

I turned on copilot in VSCode for the first time this week. The results so far have been less than stellar. It’s batting about .100 in terms of completing code the way I intended. Now, people tell me it needs to learn your ways, so I’m going to give it a chance. But one thing it has done is replaced the normal auto-completion which showed you what sort of arguments a function takes with something that is sometimes dead wrong. Like the code will not even compile with the suggested args.

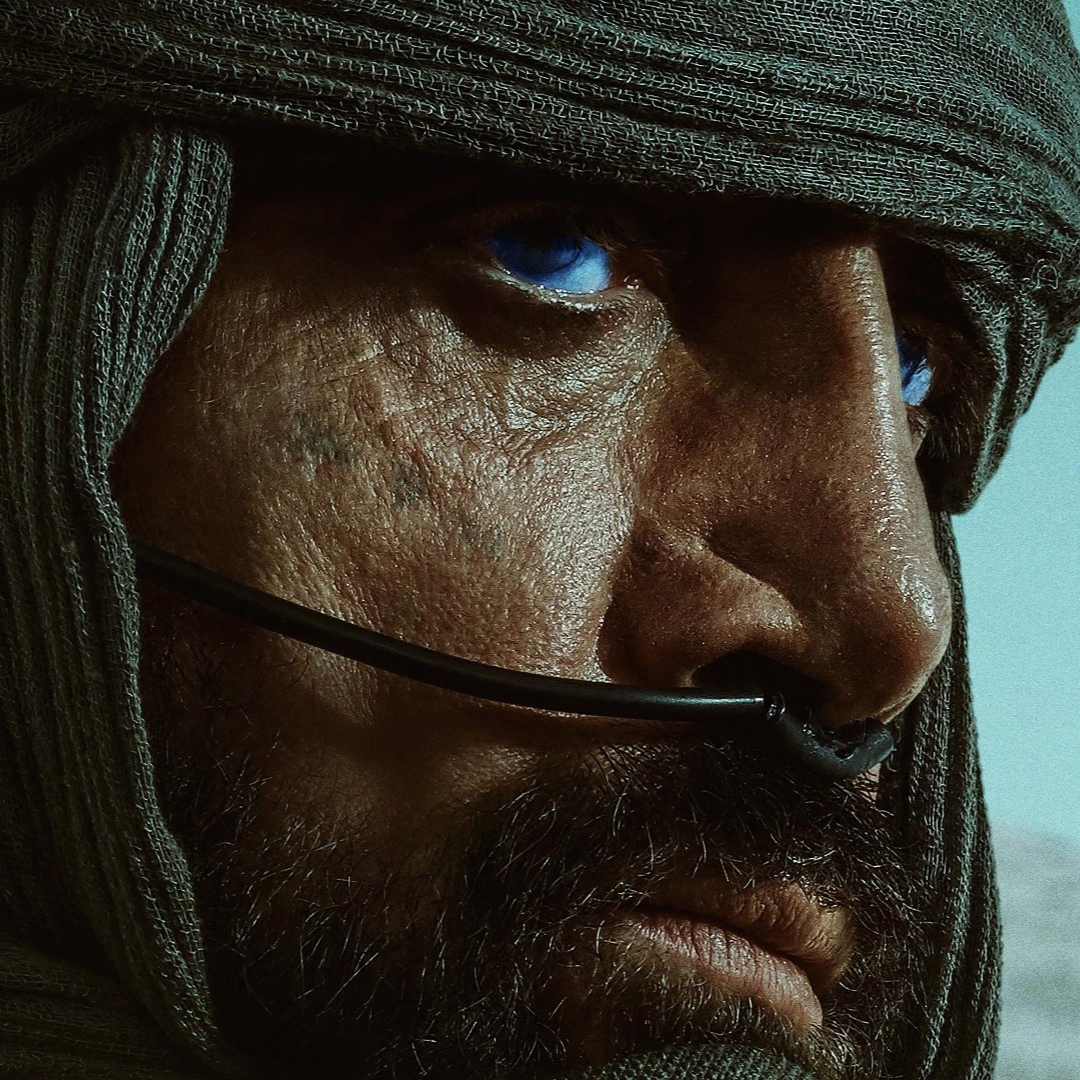

It also has a knack for making me forget what I was trying to do. It will show me something like the left side picture with a nice rail stretching off into the distance when I had intended it to turn, and then I can’t remember whether I wanted to go left or right? I guess it’s just something you need to adjust to. Like you need to have a thought fairly firmly in your mind before you begin typing so that you can react to the AI code in a reasonable way? It may occasionally be better than what you have in mind, but you need to keep the original idea in your head for comparison purposes. I’m not good at that yet.

I haven’t personally used it, but my coworker said using Cursor with the newest Claude model is a gamechanger and he can’t go back anymore 🤷‍♂️. He hasn’t really liked anything outside of Cursor yet.

Thanks, I’ll give that a shot.

deleted by creator

Try Roocode or Cline with the Claude 3.7 model. It’s pretty slick, way better than Copilot. Turn on Memory Bank for larger projects to reduce the cost of tokens.

I knocked off an android app in Flutter/Dart/Supabase in about a week of evenings with Claude. I have never used Flutter before, but I know enough coding to fix things and give good instructions about what I want.

It would even debug my android test environment for me and wrote automated tests to debug the application, as well as spit out the compose files I needed to set up the Supabase docker container and SQL queries to prep the database and authentication backend.

That was using 3.5 Sonnet, and from what I’ve seen of 3.7, it’s way better. I think it cost me about $20 in tokens. I’ve never used AI to code anything before, this was my first attempt. Pretty cool.

I used 3.7 on a project yesterday (refactoring to use a different library). I provided the documentation and examples in the initial context and it re-factored the code correctly. It took the agent about 20 minutes to complete the re-write and it took me about 2 hours to review the changes. It would have taken me the entire day to do the changes manually. The cost was about $10.

It was less successful when I attempted to YOLO the rest of my API credits by giving it a large project (using langchain to create an input device that uses local AI to dictate as if it were a keyboard). Some parts of the code are correct; the langchain stuff is set up as I would expect. Other parts are simply incorrect and unworkable. It assumes that it can bind global hotkeys in Wayland, configuration requires editing Python files instead of pulling from a configuration file, it created install scripts instead of PKGBUILDs, etc.

I liken it to having an eager newbie. It doesn’t know much, makes simple mistakes, but it can handle some busy work provided that it is supervised.

I’m less worried about AI taking my job than my job turning into being a middle-manager for AI teams.

It’s taken me a while to learn how to use it and where it works best but I’m coming around to where it fits.

Just today I was doing a new project. I wrote a couple of lines about what I needed and asked for a database schema. It looked about 80% right. Then I asked for all the models for the ORM I wanted and it did that. Probably saved an hour of tedious typing.

deleted by creator

I assume we’re talking about software testing? I’d like to know more about:

- The meaning of negative and positive tests in this context
- Good examples of badly done negative tests by LLMs

deleted by creator

Thank you for thoroughly explaining this. Your explanations make good sense to me.

And then 12 hours spent debugging and pulling it apart.

And if you need anything else, you have to use a new prompt which will generate a brand new application, it’s fun!

And it still doesn’t work. Just “mostly works”.

A bunch of superfluous code that you find does nothing.

We’re in trouble when it learns to debug.

But then, as now, it won’t understand what it’s supposed to do, and will merely attempt to apply stolen code - ahem - training data in random permutations until it roughly matches what it interprets the end goal to be.

We’ve moved beyond a thousand monkeys with typewriters and a thousand years to write Shakespeare, and have moved into several million monkeys with copy and paste and only a few milliseconds to write “Hello, SEGFAULT”

I personally find copilot is very good at rigging up test scripts based on usings and a comment or two. Babysit it closely and tune the first few tests and then it can bang out a full unit test suite for your class which allows me to focus on creative work rather than toil.

It can come up with some total shit in the actual meat and potatoes of the code, but boilerplate stuff like tests it seems pretty spot on.

I believe that, because test scripts tend to involve a lot of very repetitive code, and it’s normally pretty easy to read that code.

Still, I would bet that out of 1000 tests it writes, at least 1 will introduce a subtle logic bug.

Imagine you hired an intern for the summer and asked them to write 1000 tests for your software. The intern doesn’t know the programming language you use, doesn’t understand the project, but is really, really good at Googling stuff. They search online for tests matching what you need, copy what they find and paste it into their editor. They may not understand the programming language you use, but they’ve read the style guide back to front. They make sure their code builds and runs without errors. They are meticulous when it comes to copying over the comments from the tests they find and they make sure the tests are named in a consistent way. Eventually you receive a CL with 1000 tests. You’d like to thank the intern and ask them a few questions, but they’ve already gone back to school without leaving any contact info.

Do you have 1000 reliable tests?

Not to be that guy, but the image with all the train tracks might just be doing its job perfectly.

Engineers love moving parts, known for their reliability and vigor

Vigor killed me

The one on the right prints “hello world” to the terminal

And takes 5 seconds to do it

That’s the problem. Maybe it is.

Maybe the code the AI wrote works perfectly. Maybe it just looks like how perfectly working code is supposed to look, but doesn’t actually do what it’s supposed to do.

To get to the train tracks on the right, you would normally have dozens of engineers working over probably decades, learning how the old system worked and adding to it. If you’re a new engineer and you have to work on it, you might be able to talk to the people who worked on it before you and find out how their design was supposed to work. There may be notes or designs generated as they worked on it. And so-on.

It might take you months to fully understand the system, but whenever there’s something confusing you can find someone and ask questions like “Where did you…?” and “How does it…?” and “When does this…?”

Now, imagine you work at a railroad and show up to work one day and there’s this whole mess in front of you that was laid down overnight by some magic railroad-laying machine. Along with a certificate the machine printed that says that the design works. You can’t ask the machine any questions about what it did. Or, maybe you can ask questions, but those questions are pretty useless because the machine isn’t designed to remember what it did (although it might lie to you and claim that it remembers what it did).

So, what do you do, just start running trains through those tracks, assured that the machine probably got things right? Or, do you start trying to understand every possible path through those tracks from first principles?

It gives you the right picture when you asked for a single straight track in the prompt. Now you have to spend 10 hours debugging code and fixing hallucinations of functions that don’t exist in libraries it doesn’t even need to import.

Not a developer. I just wonder how AI hallucinations come about. Is it the ‘need’ to complete the task requested at the cost of being wrong?

No, it’s just that it doesn’t know if it’s right or wrong.

How “AI” learns is it goes through a text, say a blog post, and turns it all into numbers. E.g. the word “blog” is 5383825526283. The word “post” is 5611004646463. Over a huge amount of text, a pattern emerges: the second number almost always follows the first. Basically statistics. And it does that for all the words and word combinations it finds; immense amounts of text are needed to find all those patterns. (Fun fact: that’s why companies like OpenAI, which makes ChatGPT, need hundreds of millions of dollars to “train the model”: they need enough computing power, storage, and memory to read the whole damn internet.)

So now how do the LLMs “understand”? They don’t, it’s just a bunch of numbers and statistics of which word (turned into that number, or “token” to be more precise) follows which other word.

So now. Why do they hallucinate?

When they get your question, they turn all the words in your prompt into numbers again. Then they look in their huge databases for which words are likely to follow your words.

They add in a tiny bit of randomness, and they sometimes replace a “closer” match with a synonym or a less likely match, so they seem real.

They add “weights” so that they would rather pick one phrase over another, or e.g. give some topics very very small likelihoods - think pornography or something. “Tweaking the model”.

But there’s no knowledge as such, mostly it is statistics and dice rolling.

So the hallucination is not “wrong”, it’s just statistically likely that the words would follow based on your words.
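The "which word follows which" idea can be sketched as a toy bigram model. This is a big simplification and an assumption on my part: real LLMs use neural networks over tokens and look at much more than the previous word, and all the names here (`train_bigrams`, `next_word`, `generate`) are made up for the example.

```python
import random
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, how often each other word directly follows it."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def next_word(counts, word, rng):
    """Pick a follower at random, weighted by how often it was seen after `word`."""
    followers = counts.get(word)
    if not followers:
        return None  # never saw anything after this word
    candidates = list(followers)
    weights = [followers[candidate] for candidate in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]


def generate(counts, start, length, seed=0):
    """Chain weighted dice rolls together; nothing checks whether the result is true."""
    rng = random.Random(seed)
    words = [start]
    for _ in range(length - 1):
        following = next_word(counts, words[-1], rng)
        if following is None:
            break
        words.append(following)
    return " ".join(words)


if __name__ == "__main__":
    model = train_bigrams("the blog post is a blog post about a blog")
    print(generate(model, "a", 6))
```

The output is always made of likely word pairs, whether or not the sentence as a whole is correct, which is the point being made above.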

Did that help?

Thanks. If I understood that correctly, it doesn’t sound like AI to me.

“Might” is the important word here

While being more complex and costly to maintain

Depends on the use case. It’s most likely at a train yard or train station.

The image implies that the track on the left meets the use case criteria

I’m looking forward to the next 2 years, when AI apps are in the wild and I get to fix them lol.

As a SR dev, the wheel just keeps turning.

I’m being pretty resistant about AI code Gen. I assume we’re not too far away from “Our software product is a handcrafted bespoke solution to your B2B needs that will enable synergies without exposing your entire database to the open web”.

The right one is after 10+ hours of debugging.

God, seriously. Recently I was iterating with copilot for like 15 minutes before I realized that its complicated code changes could be reduced to an `if` statement.

AI can’t imagine an image of a full glass of wine because there are barely any images of that in any dataset out there. AI can’t think, just massage its dataset into something vaguely plausible.

And this is why I’ve never touched anything AI related

The key is identifying how to use these tools and when.

Local models like Qwen are a good example of how these can be used, privately, to automate a bunch of repetitive non-deterministic tasks. However, they can spit out some crap when used mindlessly.

They are great for sketching out software ideas though, i.e. try 20 prompts for 4 versions, get some ideas and then move over to implementation.

It’s WYSIWYG all over again…

And of course the ai put rail signals in the middle.

Chain in, rail out. Always

!Factorio/Create mod reference if anyone is interested !<

Spoiler

Your spoiler didn’t work.

It depends. AI can help write good code. Or it can write bad code. It depends on the developer’s goals.

LLMs can be great for translating pseudocode into real code or creating boilerplate or automating tedious stuff, but ChatGPT is terrible at actual software engineering.

Honestly I just use it for the boilerplate crap.

Fill in that yaml config, write those lua bindings that are just a sequence of lua_pushinteger(L, 1), write the params of my do string kind of stuff.

Saves me a ton of time to think about the actual structure.